We tested self-reported attribution. Here’s what we learned.

This article was updated Nov 2022

Browse B2B marketing posts on LinkedIn and you’ll eventually find a discussion about attribution. Some claim that you should measure as much as you can. Others say it’s impossible to track everything, and so argue that you shouldn’t bother tracking data at all.

At Dreamdata we believe that data is about competitive advantage. It’s about knowing more than your competitors. It’s about making sure that all the data you sit on, and that can be measured, is put into the equation. You should do this to inform your decisions and to strengthen your gut feeling and confidence.

You will never find us claiming that we can map 100% of your customer journeys. However, we might take you from knowing 5-10% of what’s going on in buying journeys to knowing 50, 60 or even 70%.

Self-reported attribution

One of the attribution tactics you might see suggested as an alternative (or supplement) to multi-touch modelling is “self-reported attribution”. This means adding a simple form field to your conversion pages that asks users where they first encountered your brand.

Intuitively, that sounds appealing, right? Want to know which touch was the most significant?

Let’s just ask the customers.

But it’s not all roses. The critical thinkers amongst us will question whether the person filling in the form is even aware of their first encounter with your brand. More often than not, they might not actually recall how they entered a customer journey with you.

But these are ultimately just opinions. So we put it to the test.

We decided to test self-reported attribution

The idea was to run a test on 100 demo calls booked on our website.

We would then compare the insights we could draw from the responses to a ‘self-reporting’ question on the demo sign-up form with the insights from the data we have in Dreamdata.

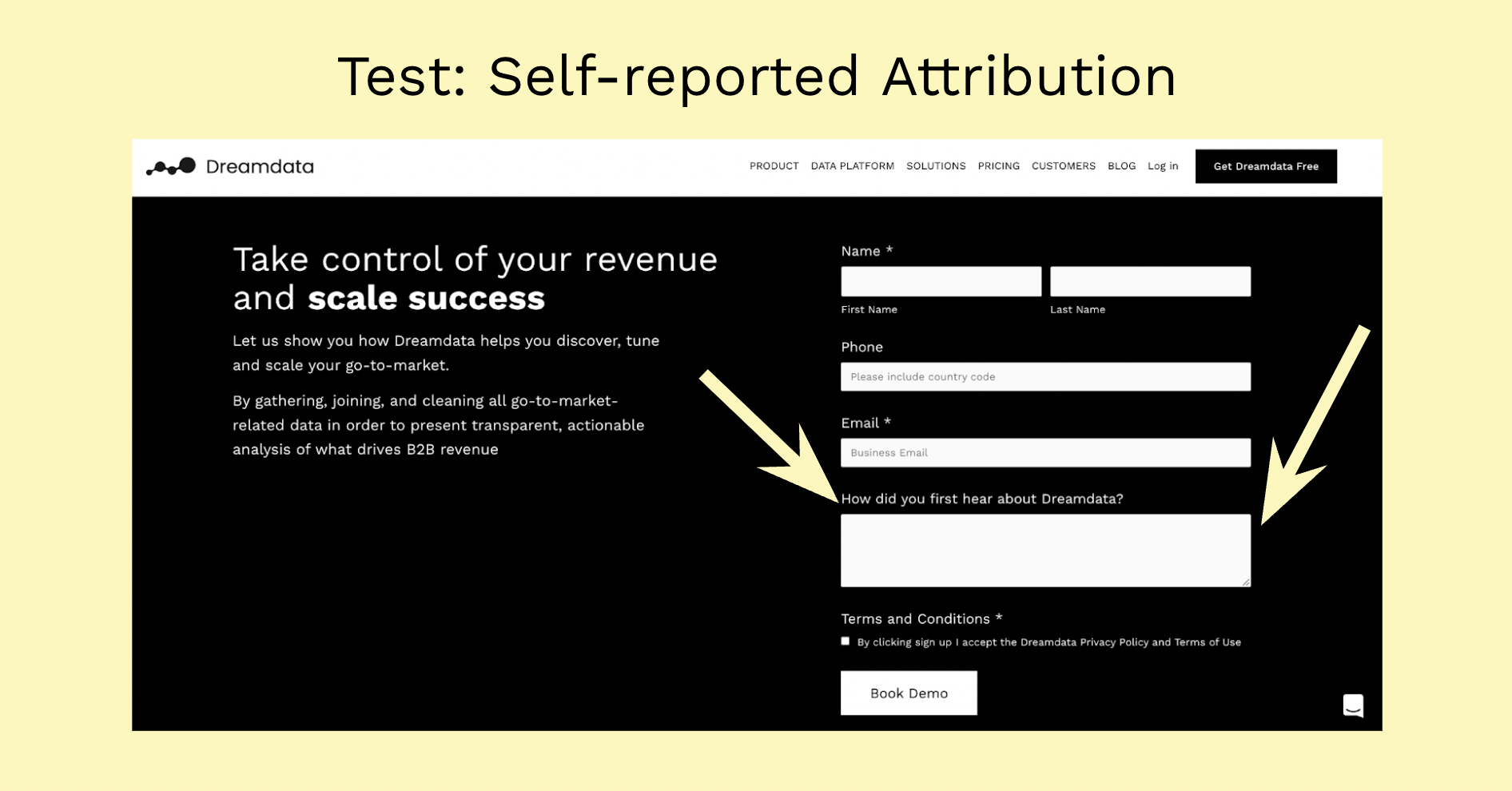

The test: a simple self-reported attribution field

We added a field asking “How did you first hear about Dreamdata?” to our demo sign-up form.

Caveat: this is certainly not a perfectly scientific testing method, and there is likely more to be said about the parameters, phraseology, etc.

In any case, below we break down what we did and what we’ve concluded so far.

With this field in place, we let the test run until we had 100 form submissions.

Of these 100 submissions, roughly 70% filled in the new field and 30% did not. As the field wasn’t mandatory, that’s still a pretty good fill rate.

The analysis

First, we needed to check whether the question was actually answered in a meaningful way.

This lowered the number of useful answers to 49 out of the 100 submissions.

Now to dig into what people actually put on the form 👀

We then tried to categorize those 49 responses as coherently and fairly as possible:

What can we learn about self-reported attribution from this?

In terms of depth of analysis, this is as far as self-reported attribution can take us.

So what can we learn from the data?

We can see that Social Selling and Word of Mouth combine to play quite an important role in generating demand and pulling accounts into the funnel.

This is encouraging, given that we’re actively trying to share knowledge and social sell on LinkedIn.

That people find us through search also makes sense. We’re creating new content for our website every week and are bidding on the most obvious search ads.

The same goes for review platforms: we can see that they influence demand. Side note → we’ve now reached the Leader category for attribution in G2’s upcoming spring report.

But that’s about as much as we can deduce from self-reported attribution.

Unless I’ve missed something obvious (let me know if I have), it offers little to no guidance beyond telling us that social selling and word of mouth are important sources. Which content ranks? Which campaign should we spend more on? What about keywords?

Multi-touch attribution with Dreamdata - the other side

Now, let’s change gears and see what information Dreamdata holds on these same 100 submissions.

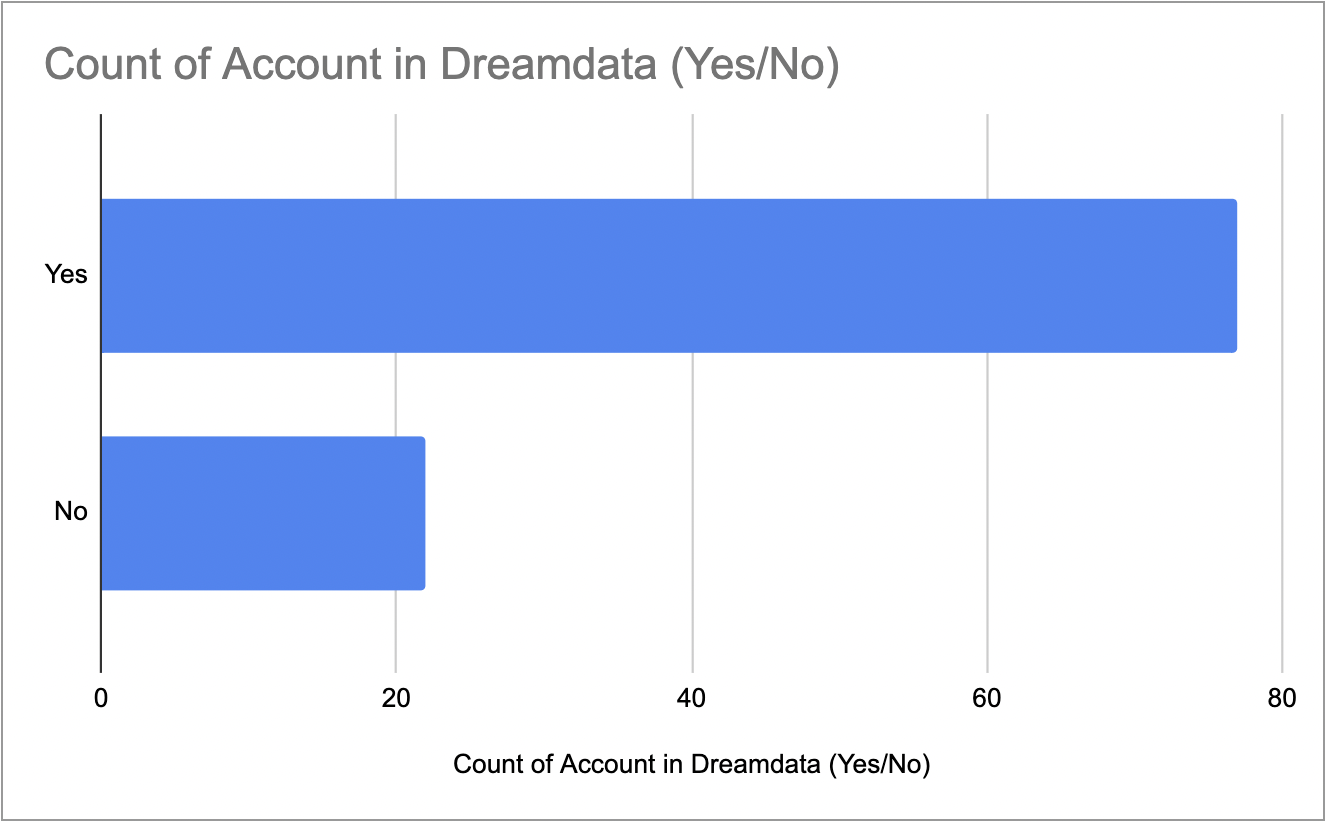

First, we were able to match 77 of the 100 form submissions with existing accounts in the Dreamdata app:

This means that, thanks to the tracking we have in place, we can paint a more accurate picture of the path they took towards submitting that form to request a demo call.

We’re able to track all recordable touches that happen in the journey from first-touch to demo request and quantify that data.

Here are some average details we can get from those 77 accounts:

What immediately sticks out is just how long the journeys leading up to a demo request are. This tells us that buyers do a lot of research before they request a demo call.

In my opinion, it also highlights just how difficult it can be for most people to recall exactly how they entered your funnel. They might write down their strongest or latest memory, but that’s likely not what got them into your world.

The difference between reported first-touch and recorded first-touch suggests as much 👇
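To make that comparison concrete, here is a minimal sketch of how one might measure agreement between the self-reported channel and the first touch recorded by tracking. The `Submission` record, its field names and the channel labels are hypothetical illustrations, not Dreamdata’s schema, and the sample records are made up rather than taken from our test.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Submission:
    """One demo request (hypothetical layout); either value may be missing."""
    reported_channel: Optional[str]  # categorized answer to "How did you first hear...?"
    recorded_channel: Optional[str]  # channel of the earliest tracked touch, if matched


def first_touch_agreement(submissions: list[Submission]) -> tuple[int, int]:
    """Return (matches, comparable), counting only submissions that have both values."""
    comparable = [
        s for s in submissions
        if s.reported_channel is not None and s.recorded_channel is not None
    ]
    # Case-insensitive comparison of the two channel labels.
    matches = sum(
        1 for s in comparable
        if s.reported_channel.lower() == s.recorded_channel.lower()
    )
    return matches, len(comparable)


# Illustrative, invented records (not our test data).
sample = [
    Submission("Search", "Search"),
    Submission("Word of mouth", "Social"),
    Submission(None, "Paid search"),     # field left empty
    Submission("Review sites", None),    # not matched to an account
]
matches, comparable = first_touch_agreement(sample)
print(f"{matches} of {comparable} comparable submissions agree")
```

Submissions missing either value are excluded rather than counted as disagreements, since an empty field or an unmatched account says nothing about recall.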

More importantly, unlike self-reported attribution, the data we get on Dreamdata is multi-touch. This means we can look into all those other touches that took place before (and after) signing up for the demo - as the image below shows.

Finally, we can dive deeper into each of those touches. Not only do we get the source and channel, but we can also drill down to campaign level and compare how campaigns are performing across the board.
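Once every touch in a journey is recorded, credit for the conversion can be spread across channels or campaigns instead of resting on a single answer. The sketch below contrasts first-touch credit with a simple linear multi-touch model, where each touch gets an equal share. Linear is just one common model chosen for illustration; it is not necessarily how Dreamdata weights touches, and the journey is invented.

```python
from collections import defaultdict


def first_touch_credit(journey: list[str]) -> dict[str, float]:
    """Single-touch model: all credit goes to the first recorded channel."""
    return {journey[0]: 1.0} if journey else {}


def linear_credit(journey: list[str]) -> dict[str, float]:
    """Linear multi-touch model: each of the n touches receives 1/n of the credit."""
    if not journey:
        return {}
    credit: defaultdict[str, float] = defaultdict(float)
    share = 1.0 / len(journey)
    for channel in journey:
        credit[channel] += share  # repeated channels accumulate several shares
    return dict(credit)


# Hypothetical journey of one account leading up to a demo request.
journey = ["LinkedIn", "Blog", "Search", "Blog", "Review site"]
print(first_touch_credit(journey))  # {'LinkedIn': 1.0}
print(linear_credit(journey))
```

With first-touch, LinkedIn takes all the credit; with the linear model, the blog (touched twice) receives the largest share. Other models, such as position-based or time-decay, weight the same touches differently.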

And this applies not just to that user but to the entire account. After all, the individual converting might not know that the “hey, have you checked Dreamdata out?” (word of mouth) from a colleague itself originated from an encounter on LinkedIn.
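The account-level point can be sketched as follows: group tracked touches by account, then compare the converting person’s own first touch with the account’s first touch. The `Touch` record, the e-mail addresses and the account name are hypothetical, and the sketch assumes people have already been resolved to accounts, which real tracking data would need to handle first.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Touch:
    account: str   # company the person belongs to (assumed already resolved)
    person: str    # individual contact
    channel: str
    at: datetime


def first_touch(touches: list[Touch]) -> Touch:
    """Earliest touch in a non-empty list."""
    return min(touches, key=lambda t: t.at)


def person_vs_account_first_touch(
    touches: list[Touch], account: str, person: str
) -> tuple[str, str]:
    """Return (person's first channel, account's first channel)."""
    account_touches = [t for t in touches if t.account == account]
    person_touches = [t for t in account_touches if t.person == person]
    return first_touch(person_touches).channel, first_touch(account_touches).channel


# Made-up example: a colleague engaged with a LinkedIn post weeks before the
# buyer visited the site directly (and might self-report "word of mouth").
touches = [
    Touch("Acme", "colleague@acme.example", "LinkedIn", datetime(2022, 9, 1)),
    Touch("Acme", "buyer@acme.example", "Direct", datetime(2022, 9, 20)),
    Touch("Acme", "buyer@acme.example", "Demo request", datetime(2022, 9, 21)),
]
print(person_vs_account_first_touch(touches, "Acme", "buyer@acme.example"))
# ('Direct', 'LinkedIn')
```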

Conclusion

The narrative of self-reported attribution appealed to our curiosity. We wanted to see how it would work in practice. What could we learn from the insights? How much could we action compared to what our tracking allows us to action?

The results were interesting but also, to us at least, unsurprising.

The responses to the self-reported attribution form were generally vague and named broad channels without specifics, e.g. “Google”.

Not entirely surprising. After all, it relies on users being able to accurately recall the journey up until that point, which is not exactly easy - not to mention that it ignores touches by other stakeholders in the account.

But the problem is more than simple inaccuracy. And here’s self-reported attribution’s dark underbelly:

If you rely only on self-reported attribution, you can be left blindfolded, with little guidance on what to do more or less of, and in the worst case you can be misled entirely.

We will admit, though, that if it’s the only data point you have available, self-reported attribution does offer some overall confirmation of your go-to-market activities. But it offers almost no guidance at the more granular, actionable level.

For now, we will remove the field from the form again.

Do let us know if you have had similar or different experiences with this.